Fooled: Teaching AI Bias with Machine Learning

Note: Lesson plan and resources for this activity can be found at the bottom of this page.

Overview

In this lesson, students will explore the impact of bias in AI models. They will understand how bias can arise from cognitive biases or a lack of diverse data. Through hands-on activities and discussions, students will learn to identify and mitigate biases in AI systems.

Students will use Google's Teachable Machine to create and test AI models, gaining practical experience with biased datasets. They will experiment with image classification tasks, starting with unbalanced datasets and then improving their models by adding more diverse examples. By the end of the lesson, students will be able to share their improved models, explain the changes they made to reduce bias, and discuss the real-world implications of biased AI. Assessment will focus on their participation, accuracy, and ability to demonstrate understanding and application of concepts related to bias in AI.

Theory

Introduction: What is bias?

- Explain that being biased means unfairly opposing or supporting someone or something, and that people often show some form of bias without realising it.

- Give an example: when offered a choice of four shirts, you may prefer one over the other three simply because it is your favourite colour. Without realising it, you picked that shirt because you are biased towards that colour.

What is bias in AI?

The growing use of artificial intelligence in sensitive areas, such as recruitment, the criminal justice system, and healthcare, raises questions about bias and fairness. Bias in AI is commonly the result of either cognitive bias unintentionally introduced by the engineer or a lack of good training data.

- Lack of data can cause bias when there are not enough examples to represent one or more categories.

- AI engineers may unintentionally introduce their own biases into the AI model. This is called cognitive bias.

Example of Cognitive Bias

An AI engineer who loves animals could subconsciously design a self-driving car to apply its brakes whenever it detects an animal. Such a car would prioritise animals and might not be as well trained to brake for a pedestrian. Inform the students that this is an example of cognitive bias.

Image created with Microsoft Copilot

Example of Lack of Data

Explain that a dataset containing 80% cat images and only 20% dog images would not be good data for training an AI model to tell cats and dogs apart, because there are not enough examples to represent dogs. The resulting model would be biased towards cats when classifying images. Inform the students that this is an example of lack of data.

Image created with Microsoft Copilot
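For teachers who want to show the 80/20 example numerically, the following is a minimal Python sketch, not part of the original lesson resources. It assumes a made-up dataset of 100 labels and a hypothetical "model" that always answers "cat". The function names `class_shares` and `per_class_accuracy` are illustrative only.

```python
from collections import Counter


def class_shares(labels):
    """Return the fraction of the dataset that belongs to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def per_class_accuracy(true_labels, predicted_labels):
    """Return, for each true class, the fraction of its items predicted correctly."""
    correct = Counter()
    seen = Counter()
    for truth, guess in zip(true_labels, predicted_labels):
        seen[truth] += 1
        if truth == guess:
            correct[truth] += 1
    return {label: correct[label] / seen[label] for label in seen}


# Made-up dataset mirroring the example: 80 cat images, 20 dog images.
labels = ["cat"] * 80 + ["dog"] * 20
shares = class_shares(labels)

# Hypothetical lazy model that has only learned the majority class.
always_cat = ["cat"] * len(labels)

overall = sum(t == p for t, p in zip(labels, always_cat)) / len(labels)
by_class = per_class_accuracy(labels, always_cat)

print("Class shares:", shares)
print("Overall accuracy:", overall)
print("Accuracy per class:", by_class)
```

The overall accuracy looks respectable even though the model never recognises a single dog, which is why checking results for each class separately is a useful habit when a dataset is unbalanced.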

Lesson Plan: Fooled

Understanding Bias in AI

This lesson is aligned with the National Curriculum in England: Secondary Curriculum (Key Stage 2/3), Curriculum for Wales (Digital Competence Framework), ISTE standards for students, and CSTA K-12 CS standards.

Lesson: Understanding Bias in AI with Teachable Machine

Overview:

In this lesson, students will explore the impact of bias in AI models. They will understand how bias can arise from cognitive biases or a lack of diverse data. Through hands-on activities and discussions, students will learn to identify and mitigate biases in AI systems.

Learning Objectives

- Understand the concept of bias and how it can manifest in AI models.

- Identify sources of bias in AI, such as cognitive bias and lack of data.

- Recognise the importance of diverse datasets in training AI models.

- Use Google's Teachable Machine to create and test a simple AI model.

Materials Needed

- Computers with internet access

- Projector and screen

- Worksheets for the hands-on activity (printed or digital)

- Access to teachablemachine.withgoogle.com

Curriculum Mapping

KS2 Computing:

- Use logical reasoning to explain how some simple algorithms work and to detect and correct errors in algorithms and programs.

KS3 Computing:

- Understand the hardware and software components that make up computer systems, and how they communicate with one another and with other systems.

Science and Technology AoLE:

- Progression Step 4: "I can analyze and evaluate the impact of digital technology on individuals, society and the environment."

Digital Competence Framework:

- Data and computational thinking strand: This strand focuses on problem-solving, turning ideas and data into models and understanding systems.

- Citizenship strand: This strand involves understanding the social and ethical implications of digital technology.

1.4 Innovative Designer:

- Students use a variety of technologies within a design process to identify and solve problems by creating new, useful, or imaginative solutions.

1.5 Computational Thinker:

- 5c: Students break problems into component parts, extract key information, and develop descriptive models to understand complex systems or facilitate problem-solving.

- 5d: Students understand how automation works and use algorithmic thinking to develop a sequence of steps to create and test automated solutions.

Algorithms and Programming (AP):

- 1B-AP-15: Test and debug (identify and fix errors) a program or algorithm to ensure it runs as intended.

Impacts of Computing (IC):

- 2-IC-20: Compare tradeoffs associated with computing technologies that affect individuals and society.

- 2-IC-21: Discuss issues of bias and accessibility in the design of existing technologies.

Starter Activity (10 minutes)

Introduction to Bias

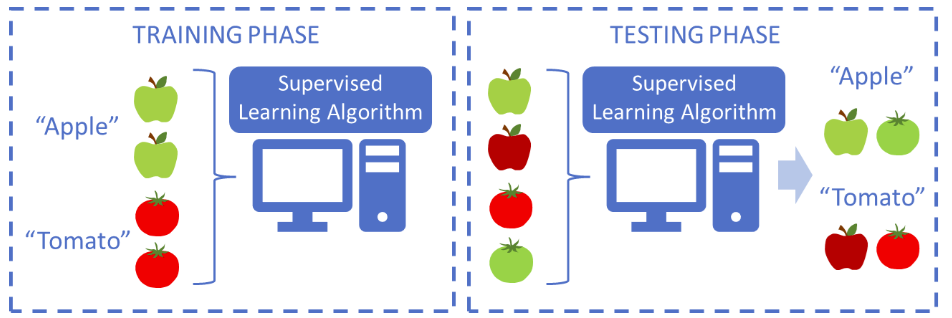

- Begin with a brief discussion on what bias means in general terms. Use everyday examples, such as preferring one shirt over another (see examples above).

- Show an image or a simple video illustrating a biased decision (e.g., choosing a favourite colour).

- Ask students to think about and share their own examples of bias in daily life.

Introduction to the Topic (10 minutes)

Understanding Bias in AI

- Explain the significance of AI in modern technology, particularly in sensitive areas like recruitment, healthcare, and criminal justice.

- Introduce the concept of bias in AI and how it can arise from cognitive bias or lack of data (See examples above).

- Provide examples of cognitive bias (e.g., an AI prioritising animals over pedestrians) and lack of data (e.g., a dataset with more cat images than dog images).

Hands-on Activity (30 minutes)

Exploring Bias with Teachable Machine

- Guide students to access Google's Teachable Machine at teachablemachine.withgoogle.com.

- Divide students into small groups and provide each group with a set of images (e.g., pictures of apples and tomatoes).

- Share the 'fooled' worksheet, which contains the instructions (see resources below). Instruct students to create an AI model using Teachable Machine. They should train the model with a biased dataset (i.e., images of only green apples and red tomatoes).

- Once the model is trained, ask students to test it with a green apple, red tomato, and red apple and observe the results.

- Discuss the outcomes and identify any biases in the model's predictions.

- Allow students time to improve their models by adding more diverse examples to the dataset.

Plenary (10 minutes)

Review and Reflection

Inform the students that machine learning models learn to recognise patterns in the data used to train them. If all the photos in a set have the same background, the same lighting, or the same colour, then the model may use those patterns to recognise pictures. Explain that, in the hands-on activity, the model might think that the red apple is a tomato because, based on the training data, it identified red as a feature of all tomatoes.
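The colour shortcut described above can be imitated with a small Python sketch. This is not how Teachable Machine works internally; it is a toy 1-nearest-neighbour classifier, and the two features (`redness` and `stalk_visibility`, both between 0 and 1) and all their values are invented for illustration.

```python
def nearest_label(examples, features):
    """Return the label of the training example closest to `features`."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    closest = min(examples, key=lambda example: squared_distance(example[0], features))
    return closest[1]


# Invented features: (redness, stalk_visibility).
# Biased training set: every apple is green, every tomato is red.
biased = [
    ((0.1, 0.8), "apple"),
    ((0.2, 0.7), "apple"),
    ((0.9, 0.1), "tomato"),
    ((0.8, 0.2), "tomato"),
]

red_apple = (0.9, 0.8)  # red like a tomato, but with an apple's stalk

# With the biased data, the closest training example is a red tomato.
before = nearest_label(biased, red_apple)

# Add some red apples so that colour alone no longer decides the answer.
diverse = biased + [((0.85, 0.75), "apple"), ((0.9, 0.9), "apple")]
after = nearest_label(diverse, red_apple)

print("Trained on biased data:", before)
print("Trained on diverse data:", after)
```

With the biased set the red apple is labelled as a tomato. Once red apples are added, the same test item is labelled as an apple, which mirrors what students should see after improving their own datasets.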

Share the following example of bias in AI and discuss the potential consequences:

Research by Georgia Tech into object recognition for self-driving vehicles (source: https://arxiv.org/pdf/1902.11097.pdf) found that the object detection models tested were about 5% less accurate at detecting pedestrians with darker skin than pedestrians with lighter skin. The researchers identified the training data as a likely source of this disparity: the data set contained about 3.5 times as many examples of people with lighter skin, so the models learned to recognise them better.

Explain that these seemingly small differences could have deadly consequences in something as potentially dangerous as a self-driving car failing to detect pedestrians.

Assessment Criteria

- Participation in discussions and activities.

- Ability to identify and explain examples of bias in AI models.

- Demonstrated improvement in AI models through the addition of diverse data.

Step-by-step instructions: fooled_worksheet.pdf

Working files: working_files.zip