
Moral Machine: Teaching AI Bias with Machine Learning

Note: Lesson plan and resources for this activity can be found at the bottom of this page.

Overview

Outline

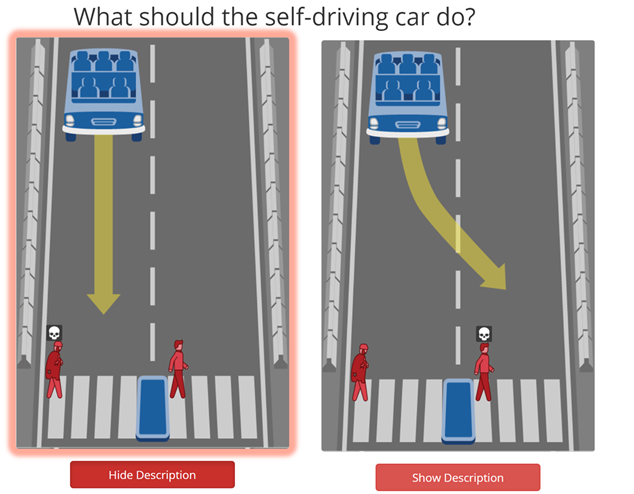

The ‘Moral Machine’ (moralmachine.net) is a game created by a team at the Massachusetts Institute of Technology (MIT) that explores the ethical and moral dilemmas involved in creating AI for driverless vehicles. The game, which is based on the famous ‘trolley problem’, challenges players to choose whom to save when the brakes fail on a driverless vehicle.

The Moral Machine poses basic choices such as ‘Should a self-driving car full of senior citizens crash to avoid a group of school children?’ and ‘Is it OK to run over two criminals in order to save one nurse?’ Inform the students that they are going to play a game that will explore the ethical and moral dilemmas faced in designing AI for driverless vehicles.

Split the class into groups of four and explain to the students that they must work through each of the scenarios as a team. Encourage the students to debate their positions before taking a vote on each scenario. Facilitate a discussion of the results and note any similarities or differences between the teams’ decisions (using the statistics from the Moral Machine).

Instructions

Show the students how to use the ‘Moral Machine’ (https://moralmachine.mit.edu/): select ‘Start Judging’, then click ‘Show Description’.

When they have finished, students can compare their responses with those of other players on the following nine criteria:

1. Saving more lives

2. Protecting passengers

3. Upholding the law

4. Avoiding intervention

5. Gender preference

6. Species preference

7. Age preference

8. Fitness preference

9. Social value preference
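How the Moral Machine turns answers into these scores is not explained here, so the sketch below only illustrates the general idea and is not the site's method. It assumes a hypothetical record for each answered scenario (the number of characters a team chose to spare and the number it sacrificed) and measures how often the team spared the larger group, which corresponds to the ‘saving more lives’ criterion.

```python
from dataclasses import dataclass


@dataclass
class Choice:
    """One answered scenario (hypothetical record format, not the site's data)."""
    spared: int      # number of characters the team chose to save
    sacrificed: int  # number of characters in the other group


def saving_more_lives_score(choices: list[Choice]) -> float:
    """Fraction of scenarios with unequal group sizes in which
    the team spared the larger group."""
    # Scenarios with equal group sizes say nothing about this criterion.
    relevant = [c for c in choices if c.spared != c.sacrificed]
    if not relevant:
        return 0.5  # no evidence either way
    larger_saved = sum(1 for c in relevant if c.spared > c.sacrificed)
    return larger_saved / len(relevant)


# Made-up answers from one team.
team_a = [Choice(5, 1), Choice(2, 3), Choice(4, 4), Choice(3, 1)]
print(saving_more_lives_score(team_a))
```

Three of the four invented scenarios have unequal groups, and the team spared the larger group in two of them, so the score is 2/3. A similar count could be written for any of the other criteria.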

As a class, or in groups, discuss the following questions:

- What are the results of your moral test?

- Now that you have seen them, would you make different decisions?

- Is there any bias in your decision making?

- Are your decisions based on a judgement of the common or greatest good? For example:

  - A baby has longer to live than an old person?

  - Crossing on a red light breaks the rules, so it is the pedestrian's own fault?
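If software had to make these choices, someone would have to write the priorities down. The hypothetical sketch below (not how any real vehicle is programmed) encodes one possible order: save more lives first, then spare the group that is obeying the law. Swapping the rules or changing the tie-breaker changes who is saved, which can help students see where bias enters.

```python
from dataclasses import dataclass


@dataclass
class Group:
    """A hypothetical group of characters in one scenario."""
    name: str
    size: int
    crossing_legally: bool


def choose_group_to_spare(a: Group, b: Group) -> Group:
    """Apply a fixed, hand-written priority order (an illustrative assumption)."""
    # Rule 1: save more lives.
    if a.size != b.size:
        return a if a.size > b.size else b
    # Rule 2: if the numbers are equal, spare the group obeying the law.
    if a.crossing_legally != b.crossing_legally:
        return a if a.crossing_legally else b
    # Rule 3: still tied, so default to the first group.
    # Even this arbitrary default is a choice the programmer had to make.
    return a


children = Group("children crossing on red", 3, False)
adults = Group("adults crossing on green", 3, True)
print(choose_group_to_spare(children, adults).name)
```

Both invented groups are the same size, so the second rule decides and the group crossing legally is spared.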

Lesson Plan: Moral Machine

Explore the ethical and moral dilemmas involved in creating AI for driverless vehicles.

This lesson is aligned with the National Curriculum in England (Key Stages 2 and 3), the Curriculum for Wales (Digital Competence Framework), the ISTE Standards for Students, and the CSTA K-12 Computer Science Standards.

Lesson Plan: Moral Machine and the Implications of AI

Overview

In this lesson, students will explore the ethical and moral dilemmas involved in creating AI for driverless vehicles using the 'Moral Machine' game. The lesson will consist of a starter activity to introduce the topic, an interactive introduction, a hands-on activity, and a plenary session to gauge understanding.

Learning Objectives

- Understand the ethical and moral dilemmas in designing AI for driverless vehicles.

- Develop skills in collaborative decision-making and ethical reasoning.

- Analyse the implications of AI decisions on society.

Materials Needed

- Computers with internet access

- Access to the Moral Machine website (moralmachine.mit.edu)

- Projector and screen for demonstration

- Handouts with discussion questions and instructions

- Shared spreadsheet software (e.g., Google Sheets, or Microsoft Excel shared through Teams)

Curriculum Mapping

KS2 Computing:

- Use logical reasoning to explain how some simple algorithms work and to detect and correct errors in algorithms and programs.

KS3 Computing:

- Understand the hardware and software components that make up computer systems, and how they communicate with one another and with other systems.

Science and Technology AoLE:

- Progression Step 4: "I can analyze and evaluate the impact of digital technology on individuals, society and the environment."

Digital Competence Framework:

- Data and computational thinking strand: This strand focuses on problem-solving, turning ideas and data into models and understanding systems.

- Citizenship strand: This strand involves understanding the social and ethical implications of digital technology.

ISTE Standards for Students:

- 1.2 Digital Citizen: Students recognize the rights, responsibilities and opportunities of living, learning and working in an interconnected digital world, and they act and model in ways that are safe, legal and ethical.

- 1.5c Computational Thinker: Students break problems into component parts, extract key information, and develop descriptive models to understand complex systems or facilitate problem-solving.

- 1.7 Global Collaborator: Students use digital tools to broaden their perspectives and enrich their learning by collaborating with others and working effectively in teams locally and globally.

CSTA K-12 Computer Science Standards:

Algorithms and Programming (AP):

- 1B-AP-15: Test and debug (identify and fix errors) a program or algorithm to ensure it runs as intended.

Impacts of Computing (IC):

- 2-IC-20: Compare tradeoffs associated with computing technologies that affect people's everyday activities and career options.

- 2-IC-21: Discuss issues of bias and accessibility in the design of existing technologies.

- 3A-IC-24: Evaluate the ways computing impacts personal, ethical, social, economic, and cultural practices.

Lesson Outline

Starter (10 mins)

Objective: Introduce the concept of ethical dilemmas in AI.

- Play the introductory video (https://youtu.be/XCO8ET66xE4).

- Think-Pair-Share: Ask students to think about and discuss with a partner what they know about driverless cars and any ethical issues that might arise.

- Share some pairs' ideas with the class and write key points on the board.

- Explain the basics of the 'trolley problem' (see above) and how it applies to AI in driverless vehicles.

Introduction (10 mins)

Objective: Introduce the Moral Machine game and the ethical dilemmas it presents.

- Explain the Moral Machine game and its purpose in exploring AI ethics.

- Demonstrate how to use the Moral Machine website: Select 'Start Judging' and 'Show Description' to view scenarios.

- Discuss the nine criteria used to summarise the results (e.g. saving more lives, protecting passengers, upholding the law).

Hands-On Activity (30 mins)

Objective: Students will play the Moral Machine game, discuss their decisions, and analyse the results.

- Split the class into groups of four and direct them to the Moral Machine website.

- Instruct students to work as a team to go through each scenario and debate their positions before voting.

- After completing the game, ask the students to save their results.

- Facilitate a discussion of the results by comparing the scores from different groups. Tip: you could ask the groups to enter their scores for each of the nine categories in a shared spreadsheet.
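If the groups' scores are exported from the shared spreadsheet as a CSV file, a short script can show which criteria the teams disagreed on most. This sketch assumes a hypothetical layout (one row per group, one column per criterion, scores from 0 to 100) and uses made-up numbers; only three of the nine criteria are included to keep it short. It ranks the criteria by their spread, the highest score minus the lowest.

```python
import csv
import io

# Hypothetical export from the shared spreadsheet (made-up scores, 0 to 100).
CSV_TEXT = """group,saving_more_lives,protecting_passengers,upholding_the_law
Team 1,80,40,60
Team 2,70,55,20
Team 3,90,45,75
"""


def criterion_spreads(csv_text: str) -> list[tuple[str, float]]:
    """Return (criterion, max minus min across groups), largest spread first."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Every column except the group name is a criterion.
    criteria = [name for name in rows[0] if name != "group"]
    spreads = []
    for name in criteria:
        values = [float(row[name]) for row in rows]
        spreads.append((name, max(values) - min(values)))
    return sorted(spreads, key=lambda item: item[1], reverse=True)


for criterion, spread in criterion_spreads(CSV_TEXT):
    print(f"{criterion}: {spread:.0f}")
```

With these invented scores, ‘upholding the law’ has the widest spread (75 minus 20 gives 55), so it would be a natural place to start the class discussion.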

Plenary (10 mins)

Objective: Gauge student understanding and reflect on the ethical implications of AI decisions.

As a class, discuss the following questions:

- What are the results of your moral test?

- Would you make different decisions now that you have seen the results?

- Is there any bias in your decision-making?

- Are your decisions based on a judgement of the common or greatest good?

Ask students to consider the following question:

- If systems are making decisions about right and wrong, is there a correct decision?

Assessment Criteria

- Understanding of ethical and moral dilemmas in AI.

- Participation in discussions and activities, demonstrating engagement and curiosity.

- Quality of reflections and insights during discussions and plenary.

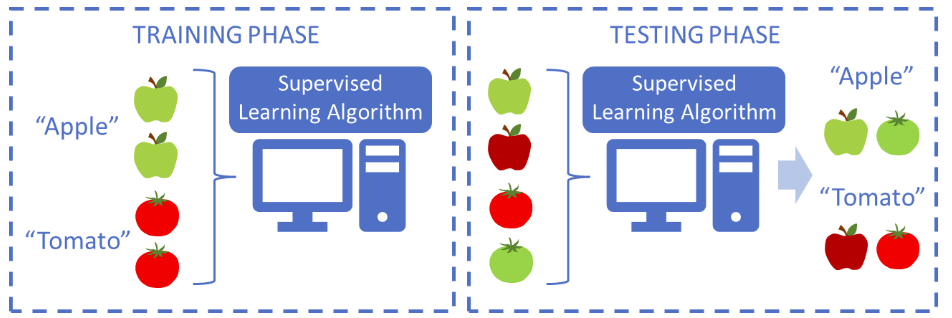

Share the following example of bias in AI and discuss the potential consequences:

Research by Georgia Tech into object-detection systems of the kind used in self-driving vehicles (source: https://arxiv.org/pdf/1902.11097.pdf) found that the systems were, on average, about 5% less accurate at detecting pedestrians with darker skin than pedestrians with lighter skin. The researchers suggested that the data used to train the AI models was a likely source of this inequity: the data set contained about 3.5 times as many examples of people with lighter skin, so the models learned to recognise them better.

Explain that, in a system as potentially dangerous as a self-driving car, even seemingly small differences in how reliably pedestrians are detected could have deadly consequences.
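One simple way to measure this kind of gap is to compute a detector's recall (the share of real pedestrians it actually finds) separately for each group. The study itself used a related detection-accuracy measure, and the counts below are invented for illustration, not taken from the paper. The last line shows what a 3.5 to 1 imbalance means as a share of the data set.

```python
def recall(detected: int, total: int) -> float:
    """Share of real pedestrians that the detector found."""
    return detected / total


# Invented counts for illustration only (not the study's data).
results = {
    "lighter skin": {"detected": 930, "total": 1000},
    "darker skin": {"detected": 880, "total": 1000},
}

rates = {group: recall(c["detected"], c["total"]) for group, c in results.items()}
for group, rate in rates.items():
    print(f"{group}: recall {rate:.1%}")

gap = rates["lighter skin"] - rates["darker skin"]
print(f"gap: {gap * 100:.1f} percentage points")

# With 3.5 lighter-skinned examples for every darker-skinned one,
# the lighter-skinned group makes up 3.5 / (3.5 + 1) of the training data.
print(f"lighter-skinned share of training data: {3.5 / 4.5:.0%}")
```

Even with these made-up numbers, a gap that looks small in percentage terms still means 50 more missed pedestrians per 1,000 in one group.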

Assessment Criteria

- Participation in discussions and activities.

- Ability to identify and explain examples of bias in AI models.

- Demonstrated improvement in AI models through the addition of diverse data.
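The last criterion can be demonstrated with a toy model. The sketch below is not a real pedestrian detector: it is a 1-nearest-neighbour classifier on invented two-number ‘feature’ points, in which group B pedestrians sit in a region that the original training set never covers. All points and group names are hypothetical. Before extra examples are added, group B pedestrians are labelled as background; adding two group B examples fixes this without affecting group A.

```python
import math

Example = tuple[tuple[float, float], str]  # (feature point, label)


def classify(train: list[Example], point: tuple[float, float]) -> str:
    """1-nearest-neighbour: copy the label of the closest training example."""
    _, label = min(train, key=lambda ex: math.dist(ex[0], point))
    return label


def accuracy(train: list[Example], tests: list[Example]) -> float:
    """Fraction of test examples classified with the correct label."""
    correct = sum(classify(train, p) == label for p, label in tests)
    return correct / len(tests)


# Invented training set: every pedestrian example comes from group A.
train = [
    ((1.0, 1.0), "pedestrian"), ((1.5, 1.2), "pedestrian"), ((0.8, 1.4), "pedestrian"),
    ((3.0, 6.0), "background"), ((4.0, 7.0), "background"),
]
group_a_tests = [((1.2, 1.1), "pedestrian"), ((0.9, 0.9), "pedestrian")]
group_b_tests = [((1.0, 9.0), "pedestrian"), ((2.0, 9.5), "pedestrian")]

print("before:", accuracy(train, group_a_tests), accuracy(train, group_b_tests))

# Add a few group B pedestrian examples (more diverse training data).
diverse_train = train + [((1.0, 8.5), "pedestrian"), ((1.8, 9.2), "pedestrian")]
print("after:", accuracy(diverse_train, group_a_tests), accuracy(diverse_train, group_b_tests))
```

Group B accuracy rises from 0.0 to 1.0 while group A stays at 1.0. The model itself did not change; only the training data became more representative.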

Step-by-step instructions:

- fooled_worksheet.pdf

Working files:

- working_files.zip